Neural network vs Crazy cats and dogs

Deep neural networks can recommend your next purchase, recognize cancer cells… but who cares? Here we focus on an age-old existential question: how accurately can they classify pictures of crazy cats and dogs?

Overview

Five years ago, it would have taken an army of computer scientists to implement an algorithm that can accurately and instantly tell a cat from a dog in a random picture. It’s now surprisingly easy to achieve extremely high (99.7%) accuracy using the 2016 ResNeXt50 architecture, as demonstrated in fast.ai lesson 2. That CNN (convolutional neural network) was trained on ImageNet and on a data set of 12,500 cats and 12,500 dogs in various poses and environments. I was curious how well it would hold up against new, weird (and funny) cat and dog pictures. Would the 99.7% accuracy hold? Which pictures wouldn’t work, and why? Would adding these new pictures to the validation data set possibly improve the accuracy on the original data set?

New data set

My wife Misako kindly found 108 new specimens, some of the funniest and weirdest the internet could offer. A sample follows. The full list of 54 cats and 54 dogs is at the very bottom of this post.

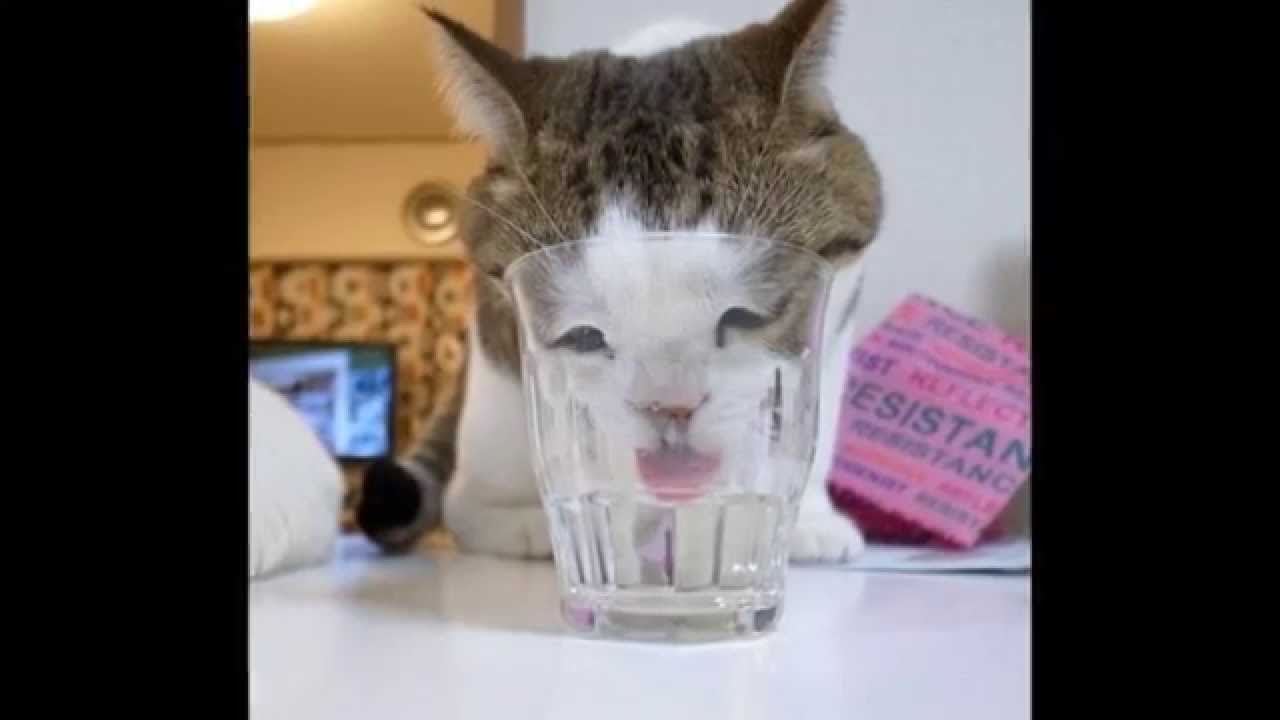

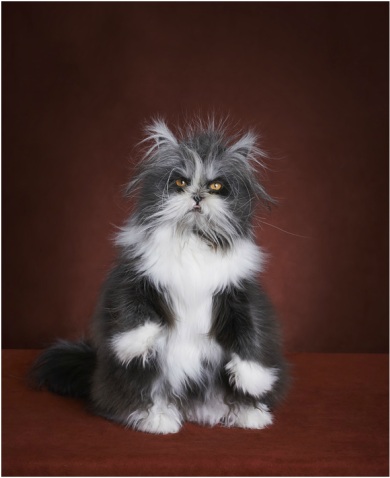

Sample crazy cats:

(The 2nd, 6th and 8th cats have clearly deformed faces - I thought these were most likely to result in mistakes.)

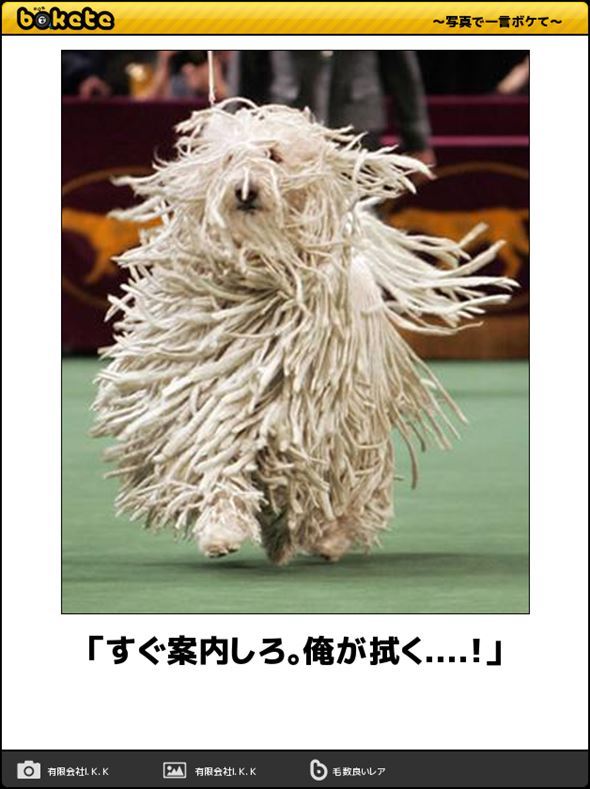

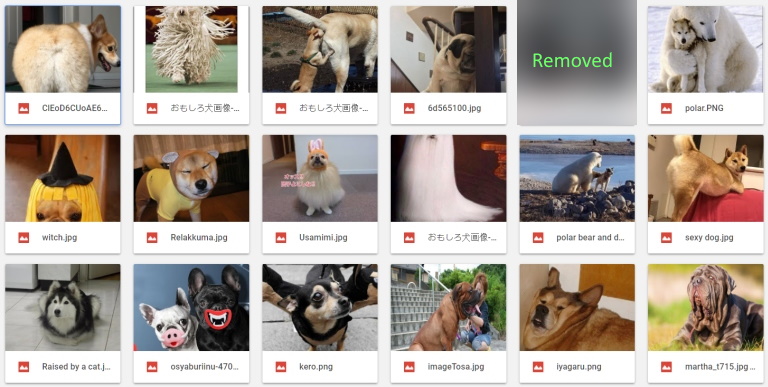

Sample crazy dogs:

(Yes, that 3rd picture is a small dog whose face is buried in the ass of a larger dog.)

Procedure

For simplicity, I added the new pictures to the validation folders and re-ran the training and prediction from the original fast.ai notebook. In total, training took 30 minutes on my NVIDIA GTX 1070. Prediction took around 1 minute for 10,500 samples (~2100 validation images * 5 TTA, i.e. data-augmented, samples per validation image). That’s an average of 35 validation images per second, or 175 augmented samples per second. I made the following changes:

- I deleted a few incorrect images from the original validation data set, e.g. pictures with both a dog and a cat, or pictures of signs that showed neither a cat nor a dog. (BTW I verified that this didn’t eliminate the remaining misclassified validation images.)

- I kept the original ~1000 validation dogs and 1000 validation cats, to which I added 50 and 70 new ones. The resulting validation accuracy is therefore a blend of the original and the new images.
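The prediction throughput quoted in the procedure can be sanity-checked with a few lines of Python. This is only a back-of-the-envelope sketch using the rounded figures from this post (about 2100 validation images, 5 TTA samples each, about 60 seconds); the variable names are mine, not fast.ai's.

```python
# Rounded figures taken from the post; all three are approximate.
n_validation_images = 2100     # ~1000 dogs + ~1000 cats + the new pictures
tta_samples_per_image = 5      # augmented samples evaluated per image
prediction_seconds = 60.0      # "around 1 minute"

# Every validation image is evaluated once per TTA sample.
total_samples = n_validation_images * tta_samples_per_image

# Throughput can be stated per validation image or per augmented sample.
images_per_second = n_validation_images / prediction_seconds
samples_per_second = total_samples / prediction_seconds

print(f"{total_samples} augmented samples in total")
print(f"{images_per_second:.0f} validation images per second")
print(f"{samples_per_second:.0f} augmented samples per second")
```

So the 35-per-second figure counts whole validation images; counted per augmented sample, the GPU is doing five times that.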

Result

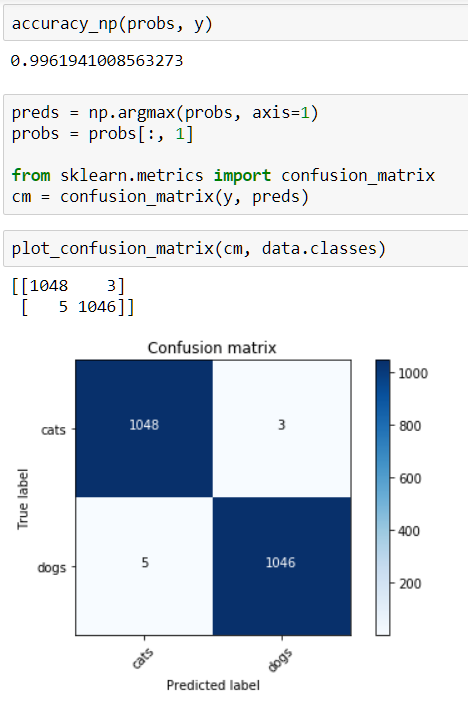

Amazingly, the accuracy stayed the same, at 99.67%.

Misclassified cats

None of my personal guesses ended up confusing the CNN. Here are the 3 cat pictures that did:

I wouldn’t blame the CNN for the third cat… It looks like a devil and I suspect it’s actually CGI (computer-generated imagery).

Interestingly, all the previously misclassified original validation cat images were correctly classified this time around, so this extra validation data clearly helped the neural network to generalize its concept.

Misclassified dogs

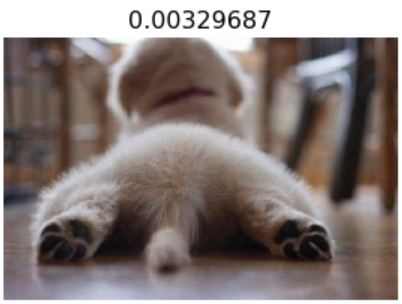

Five dogs were misclassified. The first two are from the new data. They are both puppies’ bums, photographed from behind. I suspect the original data set didn’t include this viewpoint or only included the bums of older dogs.

The last three were from the original data set:

I find this 3rd image most intriguing. I’m not sure what confused the CNN here. I plan to do more investigation later on, starting with running the experiment again to see if this is just bad luck.

Jeremy from fast.ai offered good explanations for the last two pictures:

- The 4th dog image has a wide aspect ratio. The CNN by default only runs on square input images. Without TTA (test-time augmentation), only the cropped center of the image would be processed. The center of this dog can easily be mistaken for a cat. TTA randomly picks 5 slightly rotated and scaled sub-windows, and averages the predictions. I suspect that this time around the head of the dog wasn’t picked. I also think that a smarter combination of the 5 TTA samples (weighting each one by its prediction confidence) would improve the result.

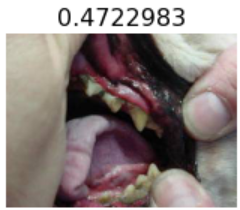

- The 5th image is a dog’s jaw. A few more images in the training/validation data could easily fix this problem.

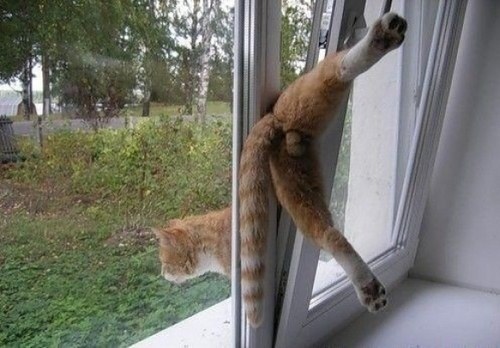
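To make the idea of a confidence-weighted TTA combination (mentioned for the 4th dog image) more concrete, here is a minimal sketch in plain Python. It is not the fast.ai implementation and I have not run it on the real data: the five probabilities are made-up illustrative values, the function names are hypothetical, and using the distance from 0.5 as the "confidence" is just one possible choice.

```python
def simple_average(dog_probs):
    """Plain TTA combination: unweighted mean of the per-sample P(dog)."""
    return sum(dog_probs) / len(dog_probs)


def confidence_weighted_average(dog_probs):
    """Weight each TTA sample by how far its P(dog) is from 0.5.

    A sample at 0.5 (undecided) gets weight 0; a sample at 0.0 or 1.0
    gets weight 1. This weighting scheme is an assumption for illustration.
    """
    weights = [abs(p - 0.5) * 2 for p in dog_probs]
    total_weight = sum(weights)
    if total_weight == 0:
        # Every sample is exactly undecided: fall back to the plain mean.
        return simple_average(dog_probs)
    return sum(w * p for w, p in zip(weights, dog_probs)) / total_weight


# Hypothetical P(dog) for 5 TTA sub-windows of one wide picture:
# four windows miss the head and lean "cat", one sees it and is sure.
tta_dog_probs = [0.35, 0.40, 0.42, 0.30, 0.95]

plain = simple_average(tta_dog_probs)
weighted = confidence_weighted_average(tta_dog_probs)

print("plain mean    :", "dog" if plain > 0.5 else "cat")
print("weighted mean :", "dog" if weighted > 0.5 else "cat")
```

With these made-up numbers, the plain mean lands just under 0.5 (cat) while the weighted mean is pulled above 0.5 (dog) by the one confident window. Whether this helps on real images is an open question: a confidently wrong window would get amplified just the same.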

Craziest cats

This is what you really came for, right? ;-) Here it is:

Craziest dogs